Motivation

I guess 2025 is the year I get a 3d printer pic.twitter.com/OzU0MsuT1i

— Jonathan Chang (@ChangJonathanC) January 5, 2025

I finished watching Pantheon at the start of 2025 and really liked it, especially how relevant it is to the real world right now, given the rapid progress of AI. Also, MIST (the intelligent robot character) is very cute!

So I became her hands in the physical world.

— Pantheon, season 1

This quote sounds like fiction, but it’s been true for a while now:

Before Claude Code or Cursor, people used chat interfaces for coding. The AI would give the human some code, and the human would apply it, run it, test it, manually curate the feedback, and ask the AI to debug and fix it. People would say AI helped them write code, but from another perspective, the human was doing a lot of the work for the AI.

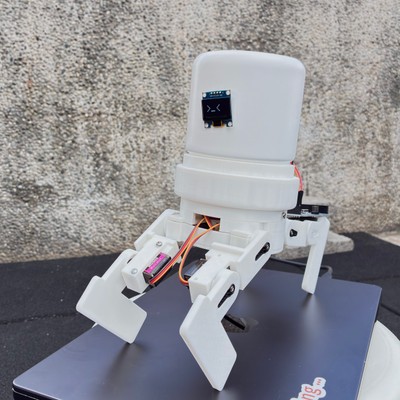

Building a MIST

Inspired by the series, I wanted to build a physical body for my AI as well. And I wanted to see how much Claude could help me build it.

This turned out to be quite a challenging task for me and Claude, and I think it could be a good benchmark for testing how AI can understand and interact with the physical world.

In this blog post, I’ll focus on the AI side and document my journey working on this project. This won’t be a tutorial on how to build it, and I won’t share any files, because I hope that one day AI will be able to help you build it and you won’t need a tutorial. Also, as a benchmark, I simply can’t share the solution.

For future reference, here is a hand-wavy definition of MIST Bench:

MIST Bench measures how well a model can guide a human in designing and building a working robot.

Designing a robot with AI

Back in undergrad, I took an introductory HCI class from Prof. Lung-Pan Cheng, where we played with various small hardware projects. It was a lot of fun, and it also planted the seed of my confidence that I could bring MIST into reality!

Despite the experience from that class, it was still very challenging to turn something I had only seen in images into reality. Thankfully I had Claude’s help, just like in Pantheon!

Here are some things I tried; some worked and some didn’t:

3D modeling with AI

Using AI to write CAD code

I asked Claude (3.6 Sonnet at the time) to generate the simple 3D shapes I wanted, but it struggled to generate even relatively simple ones. So I ended up creating the 3D models manually in Fusion 360.

Using ChatGPT Advanced Voice Mode (AVM) with vision

I used it to help me navigate the Fusion 360 UI whenever I got stuck, and it worked very well. I didn’t need any tutorials; I just pointed my phone camera at the screen and asked questions.

Image to 3D & AR

I tried various image-to-3D models available on Hugging Face Spaces and found TRELLIS to be the best at the time. Its output wasn’t good enough for printing, but it was helpful for generating a 3D model I could export to my iPhone and view with the built-in iOS AR feature to visualize MIST on my desk! (As of writing, there are newer and better models available, like NVIDIA PartPacker.)

Working with AI in the physical world

Assembly

In the end, I did most of the hardware design myself and used AI to help me figure out the wiring between components. All the models can write tutorials on how to connect things together, but sometimes it’s still easier to look up annotated images online to understand the wiring. This could be an area for improvement in future LLM agents.

Coding & Safety

Coding is easy for the AIs, but when it comes to physical logic, they can struggle to debug issues. For example, when asked to program a sitting motion, the model must account for the fact that some servos are mounted opposite to their counterparts and need their angles reversed, that each movement starts from the robot’s current position, and that the servos must move slowly. Any mistake can cause a sudden, large movement. Once, the robot moved unexpectedly while I was holding it, and my finger got pinched by its arms. Luckily, the servos weren’t strong enough to cause any damage. So be careful how much you trust the AIs, especially in the physical world.
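To make those three constraints concrete, here is a minimal sketch of what "reverse mirrored servos, start from the current position, move slowly" could look like in code. It is not the code running on MIST: the `Joint` fields, the joint names, and the 0 to 180 degree range are assumptions, reversal is assumed to mirror around 90 degrees, and the actual hardware call is stubbed out as a hypothetical `write(channel, degrees)` callback that you would replace with your own servo driver.

```python
import math
import time
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Joint:
    channel: int            # hypothetical servo channel on the driver board
    reversed: bool          # True if this servo is mounted mirrored
    min_deg: float = 0.0    # assumed safe lower limit (raw servo angle)
    max_deg: float = 180.0  # assumed safe upper limit (raw servo angle)


def to_servo_angle(joint: Joint, logical_deg: float) -> float:
    """Map a logical joint angle (same convention on both sides) to a raw servo angle.

    Assumes a 0-180 degree servo whose mirrored mounting flips the angle around 90.
    The result is clamped to the joint's limits so a bad target can't overdrive it.
    """
    raw = 180.0 - logical_deg if joint.reversed else logical_deg
    return max(joint.min_deg, min(joint.max_deg, raw))


def move_slowly(
    joints: Dict[str, Joint],
    current: Dict[str, float],
    target: Dict[str, float],
    write: Callable[[int, float], None],
    step_deg: float = 2.0,
    delay_s: float = 0.02,
) -> Dict[str, float]:
    """Interpolate joints from their current logical angles to the target pose.

    The number of steps is chosen so that no joint moves more than step_deg
    per step, and all joints arrive at the same time.
    """
    if not target:
        return current
    largest = max(abs(target[n] - current[n]) for n in target)
    steps = max(1, math.ceil(largest / step_deg))
    for i in range(1, steps + 1):
        t = i / steps
        for name, goal in target.items():
            angle = current[name] + (goal - current[name]) * t
            write(joints[name].channel, to_servo_angle(joints[name], angle))
        time.sleep(delay_s)
    current.update(target)  # remember where we are for the next motion
    return current
```

A hypothetical "sit" pose then only needs logical angles; the mirroring is handled in one place:

```python
joints = {
    "left_hip": Joint(channel=0, reversed=False),
    "right_hip": Joint(channel=1, reversed=True),
}
current = {"left_hip": 90.0, "right_hip": 90.0}
move_slowly(joints, current, {"left_hip": 30.0, "right_hip": 30.0},
            write=lambda ch, deg: print(f"ch{ch} -> {deg:.1f}"))
```

Keeping the reversal, the clamping, and the current-position bookkeeping in shared helpers means each new motion can't silently forget one of them, which is exactly the kind of mistake that causes sudden jumps.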

LLMs, Agents, and Robots

I worked on this project on and off over the past 6 months, and AI has improved a lot in that time. Still, models are not very good at interacting with the physical world, and I think making AI interact with the physical world reliably and safely is one of the most important problems to solve.

One of the reasons is that today’s LLMs are trained mainly on text data: they see the environment only through text and get no feedback from the 3D world. (I wrote more about how LLM agents interact with different environments in my previous post on Agent-Environment Middleware.)

LLMs are increasingly trained to become multi-turn tool-using agents, notably ChatGPT o3’s image tool use, CLI tool use with codex-1 and Claude 3.7/4, and very recently, Kimi K2.

RL-trained LLMs are not just better at making multi-turn tool calls, but also better at using the feedback from those tools to complete their tasks. I think we’ll need to train LLMs with RL to use tools in the 3D world, perhaps in simulation during training, and perhaps with neural network policies for controlling robots serving as the tools.

Conclusion

Before starting on the hardware for MIST, I made a simple proof of concept (POC) using voice cloning and prompting that let Claude talk and make facial expressions like MIST:

You can just do things ^_^ pic.twitter.com/hhgSQ93vG8

— Jonathan Chang (@ChangJonathanC) January 16, 2025

The MIST robot is still far from complete. Currently it can only move a little without tipping over, and it doesn’t have any AI in it yet. I hope to do more hardware projects in the future and keep improving the MIST robot. ^_^